A couple of days before Christmas, the CEO of our company sent an email to all the staff asking for someone to research a certain topic. I was immediately reminded of an academic paper I wrote a while back that covered the volunteer’s dilemma (VD). Our CEO’s request provided an excellent opportunity to study the VD in real life.

The VD models a situation where exactly one out of several people needs to volunteer for a certain task, and any further volunteers will not improve the outcome. Its most interesting result is that as the number of potential volunteers increases, not only does each individual's probability of volunteering decrease, which is not very surprising, but the overall probability that anyone volunteers at all goes down too, a feature that psychology calls diffusion of responsibility. If you have an accident, for example, it is better not to have too many people around: according to the model, with each additional bystander the chances that someone will call an ambulance actually drop.

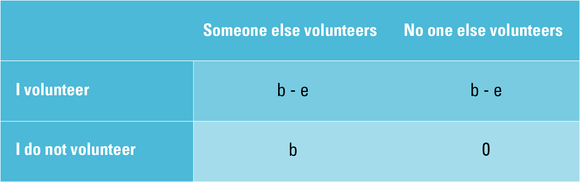

Let’s look at the model: if someone finishes the task, the boss will be happy, and that’s good for the whole company, so everyone receives a benefit (b). If no one does it, the boss will be very unhappy and might even cancel the company Christmas dinner, which is really not good and leaves everyone with an outcome of zero.

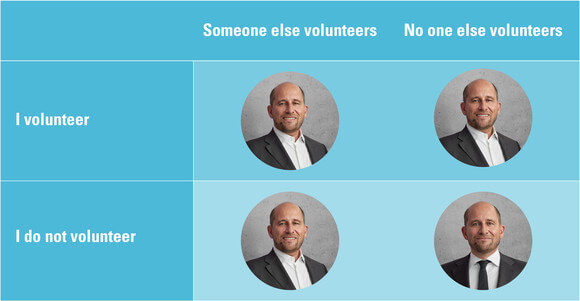

The different states of our CEO.

Everyone would obviously prefer that someone else does the task, because it takes time and effort (e) that could be spent on something else. We assume, however, that b is larger than e, so if no one else is willing to do the task, I would prefer to do it myself.

My outcomes in different states of the world.
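The payoff structure described above can be written down as a small function. This is only a sketch: the function name `payoff` and the numbers chosen for b and e are illustrative assumptions, not values from the post.

```python
def payoff(i_volunteer: bool, someone_else_volunteers: bool,
           b: float, e: float) -> float:
    """My outcome in the volunteer's dilemma, as described in the text."""
    if i_volunteer:
        # The task gets done whatever the others do, but I bear the effort.
        return b - e
    if someone_else_volunteers:
        # I free-ride on another volunteer.
        return b
    # Nobody did it: the boss is unhappy and everyone gets zero.
    return 0.0


# Illustrative values only (assumption); the model just needs b > e > 0.
b, e = 10.0, 2.0
for me in (True, False):
    for other in (True, False):
        print(f"I volunteer={me!s:5}  someone else={other!s:5}  "
              f"payoff={payoff(me, other, b, e)}")
```

Because b is larger than e, volunteering beats staying out whenever nobody else steps up, and staying out wins whenever somebody else does.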

Obviously, the ideal solution (and a pure-strategy Nash equilibrium) is reached if exactly one person volunteers. Any further volunteers will not increase the benefits but will increase the overall costs, while finding no volunteer at all is the worst possible outcome for everyone. However, as this is a coordination game, the ideal outcome is hard to establish, so we resort to looking at the symmetric mixed strategy equilibrium. In a game with n players, the probability that any given player does not volunteer in this equilibrium is q* = (e/b)^(1/(n-1)). It follows that the probability of no one volunteering is (q*)^n = (e/b)^(n/(n-1)). Since e/b < 1 and the exponent n/(n-1) shrinks towards 1 as n grows, (q*)^n rises with n: the more people are involved, the higher the chances of no one volunteering.
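Where does the formula come from? In the mixed equilibrium I must be indifferent: volunteering gives me b – e for sure, while staying out gives me b only if at least one of the other n-1 players volunteers, i.e. b(1 – q^(n-1)). Setting the two equal yields q^(n-1) = e/b. The sketch below evaluates the resulting formulas for a few group sizes; the function names and the ratio e/b = 0.2 are assumptions made purely for illustration.

```python
def stay_out_prob(n: int, e: float, b: float) -> float:
    """Symmetric equilibrium probability q* that one player does NOT volunteer."""
    if n < 2 or not 0 < e < b:
        raise ValueError("need n >= 2 and 0 < e < b")
    return (e / b) ** (1 / (n - 1))


def nobody_prob(n: int, e: float, b: float) -> float:
    """Probability that none of the n players volunteers: (q*)^n."""
    return stay_out_prob(n, e, b) ** n


# Illustrative values only (assumption): effort is a fifth of the benefit.
e, b = 2.0, 10.0
for n in (2, 5, 10, 40):
    print(f"n={n:>2}  q*={stay_out_prob(n, e, b):.3f}  "
          f"P(no volunteer)={nobody_prob(n, e, b):.3f}")
```

Both columns grow with n, and the probability that nobody volunteers creeps up towards e/b from below.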

Because it was almost Christmas, there were only 40 people in the office when our boss sent the email. Eight of them, or 1/5, did the research and replied, so if we assume that everyone decided simultaneously and independently, q* = 4/5. Solving q* = (e/b)^(1/(n-1)) for e/b with n = 40 gives e/b = (4/5)^39, which puts the ratio between effort and benefit at roughly 1:6018. Obviously, we care a lot about our boss (or about the Christmas dinner).1
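The back-of-the-envelope estimate can be reproduced by inverting the equilibrium formula, e/b = (q*)^(n-1). The helper name below is an assumption for illustration, and the sketch inherits the heroic assumption that the observed share of volunteers equals the equilibrium volunteering probability.

```python
def effort_benefit_ratio(n: int, volunteers: int) -> float:
    """Implied e/b, treating the observed volunteer share as 1 - q*."""
    q_star = 1 - volunteers / n   # share of people who stayed out
    return q_star ** (n - 1)      # invert q* = (e/b)^(1/(n-1))


ratio = effort_benefit_ratio(n=40, volunteers=8)
print(f"e/b = {ratio:.3e}, i.e. the benefit is about {1 / ratio:,.1f} times the effort")
```

The result lands at a little over 6,000, in line with the ratio quoted above.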

Now let me give you some science-based life advice on dealing with this sort of situation.

Besides the symmetric mixed strategy equilibrium, this game also has asymmetric mixed strategy equilibria in which some players choose the pure strategy of not volunteering while others mix between strategies. These equilibria become very interesting once we introduce communication, which is what we did in our paper.

If I can communicate, I have two basic options: I can tell everyone I will volunteer, or I can tell everyone I will not.2 If I tell everyone else I will volunteer, I expect them not to volunteer, so we will end up in the pure Nash equilibrium and everyone will be happy, although I will be a little less happy than everybody else as I will have to put in overtime. If I tell everyone I will not volunteer, everyone except myself will play mixed strategies.

So what should I do? If I choose to volunteer and announce this upfront, I will get an outcome of (b – e). If I don’t say anything at all and everyone including myself plays the mixed strategy equilibrium, I will also get an expected outcome of (b – e): in a mixed equilibrium I must be indifferent between volunteering and not volunteering, and volunteering yields (b – e) for sure. But if I tell everyone I will not volunteer, my payoff will increase!

Because everyone else knows I will not be part of the mixed strategy group, they will volunteer with a higher probability. Remember what we said about diffusion of responsibility? The higher the number of potential volunteers, the lower the volunteering probability both individually and collectively, and obviously, vice versa. By telling everyone I will not volunteer, I am simply reducing the number of potential volunteers. Therefore, the overall probability that there is at least one volunteer goes up, and so does my expected outcome and the overall efficiency.3
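The comparison can be checked numerically. The sketch below assumes that my announcement is believed and that the remaining n-1 players then play the symmetric mixed equilibrium among themselves, so it needs at least three players. Function names and the values of b and e are illustrative assumptions; n = 40 is taken from the office example.

```python
def q_symmetric(m: int, e: float, b: float) -> float:
    """Stay-out probability when m players mix symmetrically."""
    return (e / b) ** (1 / (m - 1))


def stay_silent(n: int, e: float, b: float) -> tuple[float, float]:
    """All n players mix. Returns (P(task gets done), my expected payoff)."""
    q = q_symmetric(n, e, b)
    # Indifference in the mixed equilibrium pins my payoff at b - e.
    return 1 - q ** n, b - e


def announce_opt_out(n: int, e: float, b: float) -> tuple[float, float]:
    """I credibly stay out and the other n-1 players mix among themselves."""
    if n < 3:
        raise ValueError("need at least two remaining players to mix")
    q = q_symmetric(n - 1, e, b)
    p_done = 1 - q ** (n - 1)
    # I never pay the effort, so I get b whenever someone else volunteers.
    return p_done, b * p_done


# Illustrative values only (assumption), with n = 40 as in the office example.
n, e, b = 40, 2.0, 10.0
for label, (p_done, mine) in [("stay silent", stay_silent(n, e, b)),
                              ("announce opt-out", announce_opt_out(n, e, b))]:
    print(f"{label:<17} P(task done)={p_done:.4f}  my payoff={mine:.4f}")
```

With 40 people the gain from opting out is small, because removing one potential volunteer barely changes the group size; in small groups the effect is much more pronounced.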

So, whenever your boss sends an email to everyone asking for something, hit reply all and write “I won’t do it. Bye!” This will generate the best outcome for you and raise the chances that someone will do it, so in effect, you are doing your boss a favour.4

1 I am aware that I am disregarding lots of factors here, e.g. the additional benefit in terms of career prospects that being a volunteer might have, and that a sample size of one could be insufficient to accurately estimate probabilities. Please do not take this part of the post too seriously.

2 Both messages are cheap talk, but in our paper we explain why they are credible, and we also show experimentally that people indeed believe the messages and act accordingly.

3 Although, from a collective point of view, it would obviously be even better if I announced that I will volunteer and then followed through.

4 It might be a good idea to reference this post though, so everyone understands what you are doing is for their benefit.

SOURCES:

Feldhaus, C., & Stauf, J. (2016). More than words: The effects of cheap talk in a volunteer’s dilemma. Experimental Economics, 19(2), 342–359.